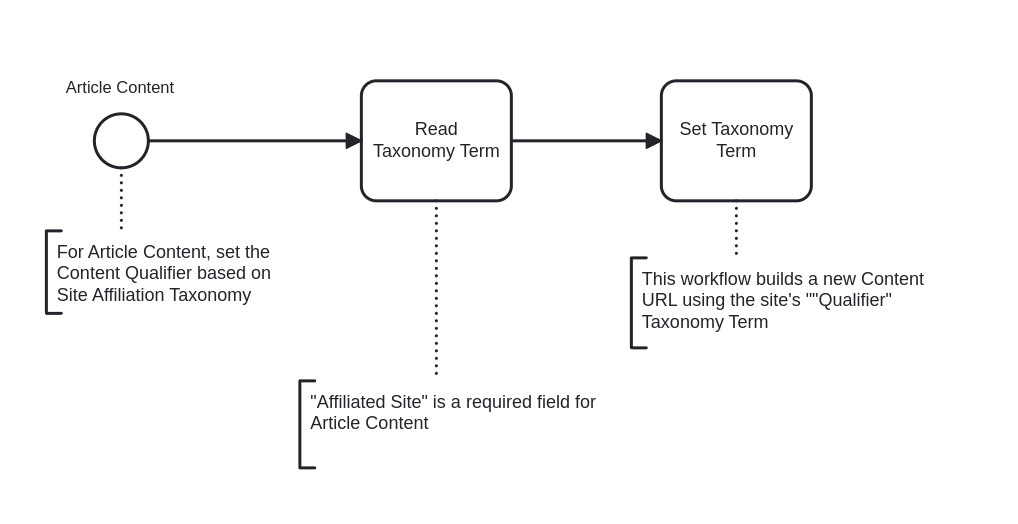

Drupal ECA sets each content item's URL path (alias) based on its assigned "Affiliated Site". In our example, an Article receives one of three path prefixes:

dangercactus.com -> /spines

gluebox.com -> /design

snowmaid.com -> /camp
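The mapping above works like a lookup table. The Python sketch below only illustrates the aliases the ECA rule is expected to produce. ECA itself is configured in Drupal's UI, and the `alias_for()` helper and its slug argument are hypothetical.

```python
# Hypothetical model of the ECA rule's outcome: affiliated site -> path prefix.
SITE_PREFIXES = {
    "dangercactus.com": "/spines",
    "gluebox.com": "/design",
    "snowmaid.com": "/camp",
}


def alias_for(site: str, slug: str) -> str:
    """Return the path alias an Article would get for its affiliated site."""
    prefix = SITE_PREFIXES.get(site)
    if prefix is None:
        raise ValueError(f"no path prefix configured for {site!r}")
    # Strip stray slashes so the result always has exactly one separator.
    return f"{prefix}/{slug.strip('/')}"


print(alias_for("gluebox.com", "my-first-article"))  # /design/my-first-article
```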

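1. Set Up Apache Redirects Per Domain

In the main domain's virtual host, use mod_rewrite to permanently redirect (301) each affiliated path prefix to its own domain: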

```apache
<VirtualHost *:80>
    ServerName maindomain.com
    ServerAlias www.maindomain.com

    RewriteEngine On

    # Redirect /design/* to domain1.com (the full path is preserved via $1)
    RewriteCond %{REQUEST_URI} ^/design/
    RewriteRule ^(.*)$ http://domain1.com$1 [R=301,L]

    # Redirect /style/* to domain2.com
    RewriteCond %{REQUEST_URI} ^/style/
    RewriteRule ^(.*)$ http://domain2.com$1 [R=301,L]

    # Other configurations...
</VirtualHost>
```

Add a RewriteCond/RewriteRule pair for each remaining affiliated domain, adjusting the domain names and path prefixes as required.

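Check the syntax with `apachectl configtest`, then apply the changes by restarting (or gracefully reloading) Apache. The rules require mod_rewrite; on Debian and Ubuntu it can be enabled with `a2enmod rewrite`.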
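Before deploying, it can help to reason about which requests the rewrite rules will redirect. The sketch below models the intended behaviour in Python; it is not how Apache evaluates rules. It assumes each prefix from the example mapping (`/spines`, `/design`, `/camp`) should redirect to that site's domain, with the original path kept as `$1` does. Query strings are not modelled.

```python
from typing import Optional

# Assumed prefix -> target domain pairs, one per RewriteCond/RewriteRule pair.
REDIRECTS = {
    "/spines/": "dangercactus.com",
    "/design/": "gluebox.com",
    "/camp/": "snowmaid.com",
}


def redirect_target(request_uri: str, scheme: str = "http") -> Optional[str]:
    """Return the 301 Location a request on the main domain would get, or None."""
    for prefix, domain in REDIRECTS.items():
        # Mirrors RewriteCond %{REQUEST_URI} ^/prefix/ ; the full path is kept ($1).
        if request_uri.startswith(prefix):
            return f"{scheme}://{domain}{request_uri}"
    return None  # no rule matched: the main domain serves the request itself


print(redirect_target("/design/chairs"))  # http://gluebox.com/design/chairs
```

Note that `/design` without a trailing slash is not redirected, matching the `^/design/` condition.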

2. Add Canonical Tags Per Domain

Identify Content and Applicable URLs:

Determine the correct, domain-specific URL for each piece of content on your site.

Update Drupal Templates:

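Edit your Drupal theme templates (typically html.html.twig) to include a canonical link element in the `<head>` section of your HTML. Drupal 10 templates do not provide a ready-made canonical URL variable, so the value has to be supplied, for example from a preprocess function in your theme, and must resolve to the correct domain for the content being viewed. Drupal core already adds a canonical link to node pages, so check the rendered output for duplicate canonical tags.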

Example:

```html
<link rel="canonical" href="{{ canonical_url }}" />
```

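Here `{{ canonical_url }}` is a placeholder, not a built-in Drupal variable. Populate it (for example in a `THEME_preprocess_html()` function) with the absolute URL on the content's affiliated domain: an Article affiliated with gluebox.com should point to gluebox.com, not to the main domain.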
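How `canonical_url` gets its value depends on your setup (PHP in a preprocess function, or Metatag tokens). The Python sketch below only shows the selection logic: take the content's path alias, find the affiliated site by its first path segment, and build an absolute URL on that domain. The prefix mapping comes from the example at the top; the `https` scheme and the `maindomain.com` fallback are assumptions.

```python
from urllib.parse import urlunsplit

# Path prefix -> canonical domain, taken from the example mapping.
PREFIX_DOMAINS = {
    "spines": "dangercactus.com",
    "design": "gluebox.com",
    "camp": "snowmaid.com",
}


def canonical_url(alias: str, fallback_domain: str = "maindomain.com") -> str:
    """Build the absolute canonical URL for a path alias such as /design/chairs."""
    first_segment = alias.lstrip("/").split("/", 1)[0]
    # Content without an affiliated prefix stays canonical on the main domain.
    domain = PREFIX_DOMAINS.get(first_segment, fallback_domain)
    return urlunsplit(("https", domain, alias, "", ""))


print(canonical_url("/design/chairs"))  # https://gluebox.com/design/chairs
```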

Use Drupal SEO Modules:

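Use contributed modules to manage this across the site: Metatag can set canonical URLs (and other SEO-related tags) per content type using tokens, while SEO Checklist provides a checklist of recommended SEO modules and tasks.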

3. Configure robots.txt Per Domain

Create Custom robots.txt for Each Domain:

Write a separate robots.txt file for each domain, specifying which paths should be allowed or disallowed for crawling. Because only one file named robots.txt can sit in the root directory of your Drupal installation, store the per-domain versions under distinct names and serve the right one based on the requested domain, either through Apache or through Drupal itself.

Implement Domain-Specific robots.txt Delivery:

Configure Apache to serve a domain-specific robots.txt file based on the requested host name. This can be done with mod_rewrite rules similar to the redirects above: match on %{HTTP_HOST} and internally rewrite /robots.txt to that domain's file.
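The Apache rules only need to make one decision: which file answers /robots.txt for a given Host header. The Python sketch below models that host-to-file decision; the `robots/<domain>.txt` layout and the `www.` handling are assumptions, not something the setup above prescribes.

```python
from typing import Optional

# Hypothetical layout: one file per domain, e.g. robots/gluebox.com.txt
KNOWN_DOMAINS = {"dangercactus.com", "gluebox.com", "snowmaid.com"}


def robots_file_for_host(host: str) -> Optional[str]:
    """Map a request's Host header to the robots.txt file that should be served."""
    name = host.strip().lower().split(":", 1)[0]  # drop any :port suffix
    if name.startswith("www."):
        name = name[len("www."):]  # treat www.example.com like example.com
    if name in KNOWN_DOMAINS:
        return f"robots/{name}.txt"
    return None  # fall back to the default robots.txt in the web root


print(robots_file_for_host("www.gluebox.com"))  # robots/gluebox.com.txt
```

A plain internal rewrite (no `R` flag) keeps the file at /robots.txt from the crawler's point of view.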

Test robots.txt Configuration:

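Use the robots.txt report in Google Search Console (which replaced the older robots.txt Tester) to confirm that each domain's robots.txt is fetched and parsed without errors, and inspect sample URLs to make sure they are blocked or allowed as intended.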
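For a quick local check alongside Search Console, Python's standard `urllib.robotparser` can evaluate a robots.txt body against sample URLs. The rules below are a hypothetical gluebox.com file that blocks the other sites' prefixes; substitute the contents of your real files.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for gluebox.com; replace with the real file's contents.
GLUEBOX_ROBOTS = """\
User-agent: *
Disallow: /spines/
Disallow: /camp/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if user_agent may fetch url under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in ("https://gluebox.com/design/chairs", "https://gluebox.com/camp/tents"):
    print(url, is_allowed(GLUEBOX_ROBOTS, url))
```

Python's parser uses simple prefix matching with the first matching rule winning, so results for wildcard rules can differ from Google's interpretation.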

Final Steps

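Verify the Setup: Use tools such as HTTP status code checkers, SEO site audit tools, and Google Search Console to confirm that redirects return 301 responses, canonical tags point to the correct domains, and robots.txt rules behave as intended.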
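Audit tools report on canonicals in bulk, but a small script can spot-check individual pages, including whether a page ends up with more than one canonical tag. This sketch uses only the Python standard library; the function names are illustrative.

```python
from html.parser import HTMLParser
from typing import List
from urllib.request import urlopen


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: List[str] = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <link ... /> tags also arrive here via handle_startendtag.
        if tag != "link":
            return
        attributes = dict(attrs)
        rels = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "canonical" in rels and href:
            self.canonicals.append(href)


def extract_canonicals(html: str) -> List[str]:
    """Return every canonical URL declared in an HTML string."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals


def fetch_canonicals(url: str, timeout: float = 10.0) -> List[str]:
    """Download a page (urlopen follows redirects) and return its canonical URLs."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return extract_canonicals(response.read().decode(charset, errors="replace"))
```

For example, `fetch_canonicals("https://gluebox.com/design/chairs")` (a placeholder URL built from the example domains) should return a single entry on gluebox.com; two entries suggest that both the theme and core or Metatag are emitting a canonical tag.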

Monitor and Adjust: SEO is an ongoing process. Monitor your site's performance in search engine rankings and adjust your strategies as needed based on analytics and search engine feedback.

This checklist provides a structured approach to setting up Apache redirects, canonical tags, and robots.txt files for SEO purposes. Tailor each step to your specific Drupal setup and domains to optimize your site's search engine presence.

(Create an ECA rule to set content paths based on the "Affiliated Site".)